1. Download koboldcpp.exe from https://github.com/LostRuins/koboldcpp/releases (I picked the latest version).

2. Download the GGML .bin file for the model(s) you want to use. You can get Llama 2 models from https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML: go to the Files tab to find and download the one(s) you want. Check which large language models (LLMs) are compatible with Kobold before downloading others.

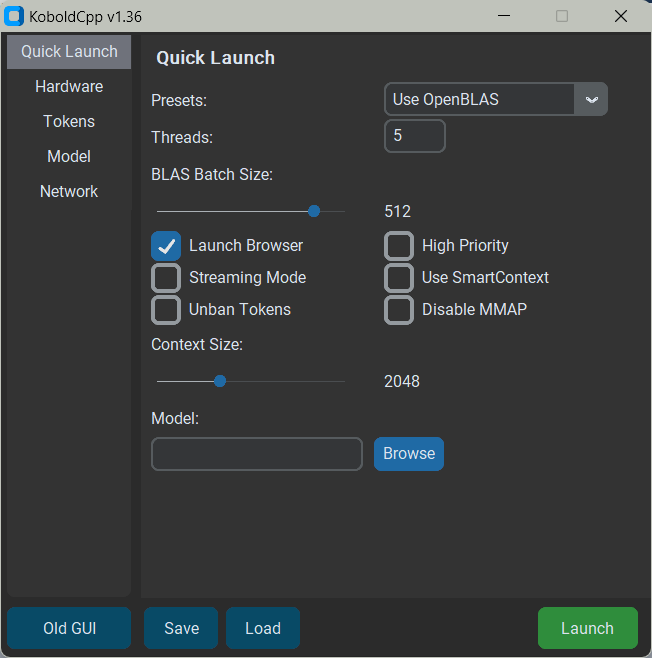
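If you'd rather script the download than click through the Files tab, Hugging Face serves each repo file at a `resolve/main` URL. Here is a minimal Python sketch. The filename used below, `llama-2-7b-chat.ggmlv3.q4_0.bin`, is only an example I'm assuming; check the Files tab for the exact names and quantizations on offer.

```python
import shutil
import urllib.request
from pathlib import Path

HF_BASE = "https://huggingface.co"


def model_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct-download URL for one file in a Hugging Face repo."""
    return f"{HF_BASE}/{repo_id}/resolve/{revision}/{filename}"


def download(url: str, dest: Path) -> Path:
    """Stream the file to disk in 1 MiB chunks so a multi-GB model never sits in memory."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as resp, open(dest, "wb") as out:
        shutil.copyfileobj(resp, out, length=1024 * 1024)
    return dest
```

For example, `download(model_url("TheBloke/Llama-2-7B-Chat-GGML", "llama-2-7b-chat.ggmlv3.q4_0.bin"), Path("models") / "llama-2-7b-chat.ggmlv3.q4_0.bin")` saves the file into a `models` folder (again, the filename is a hypothetical pick).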

3. For command line avoiders: just double-click koboldcpp.exe. A command line window and a GUI window open up.

4. In the GUI window, click Model, then Browse, select one of the downloaded GGML .bin files, then click Launch.

5. The command line window stays open, showing info on the input prompts, output, processing time etc., while you use the model in the browser tab. When you're done, just close the window and the browser tab. All data, inputs and outputs included, stays local to your computer.
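If you don't mind the command line, the GUI steps roughly correspond to passing the model path as a flag. Here is a minimal Python sketch that builds and runs such a command. I believe KoboldCpp accepts the flags `--model`, `--port`, `--threads` and `--launch`, and I'm assuming 5001 as the default port. Run `koboldcpp.exe --help` to confirm them for your version.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def kobold_command(exe: Path, model: Path, port: int = 5001,
                   threads: Optional[int] = None,
                   launch_browser: bool = True) -> List[str]:
    """Build a command line roughly equivalent to picking a model in the GUI and clicking Launch.

    Flag names are assumptions; check `koboldcpp.exe --help` for your version.
    """
    cmd = [str(exe), "--model", str(model), "--port", str(port)]
    if threads is not None:
        cmd += ["--threads", str(threads)]  # number of CPU threads to use
    if launch_browser:
        cmd.append("--launch")  # open the web UI in a browser tab once loading finishes
    return cmd


def run_kobold(cmd: List[str]) -> int:
    """Run koboldcpp in the foreground and return its exit code when it stops."""
    return subprocess.run(cmd).returncode
```

Usage would look like `run_kobold(kobold_command(Path("koboldcpp.exe"), Path("models/llama-2-7b-chat.ggmlv3.q4_0.bin")))`, where the model path is a hypothetical example. Building the argument list separately keeps it easy to print or tweak before running.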

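NB. You need a lot of RAM, especially for the bigger models. As a rough guide, the model file has to fit in your free memory, so compare the file sizes on the Files tab with the RAM you have available.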
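To put rough numbers on "a lot", you can estimate the size of the weights from the parameter count and the quantization. The Python sketch below is a back-of-the-envelope calculation, not a precise figure. It assumes GGML's q4_0/q8_0 block layout, where every 32 weights share one 2-byte scale. It also assumes about 6.74 billion parameters for Llama 2 7B and a hypothetical 1 GB allowance for context and runtime overhead.

```python
# Bits per weight for some GGML formats. In q4_0/q8_0, each block of 32 weights
# stores the quantized values plus one 2-byte (16-bit) fp16 scale.
BITS_PER_WEIGHT = {
    "q4_0": (32 * 4 + 16) / 32,  # 16 bytes of 4-bit values + 2-byte scale -> 4.5
    "q8_0": (32 * 8 + 16) / 32,  # 32 bytes of 8-bit values + 2-byte scale -> 8.5
    "f16": 16.0,                 # unquantized half precision
}


def estimate_model_gb(n_params: float, quant: str) -> float:
    """Rough weight size in GB (10**9 bytes), assuming every weight uses `quant`."""
    return n_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits_in_ram(n_params: float, quant: str, free_ram_gb: float,
                overhead_gb: float = 1.0) -> bool:
    """Very rough check: weights plus a hypothetical fixed overhead must fit in free RAM."""
    return estimate_model_gb(n_params, quant) + overhead_gb <= free_ram_gb
```

For the 7B chat model this gives about 3.8 GB at q4_0 and 7.2 GB at q8_0, which should roughly match the file sizes listed on the Files tab. The bigger 13B and 70B models scale up accordingly.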

Thanks to Autumn Skerritt's helpful blog (which also covers Mac & Linux and has other useful info). I just added the info on the GUI and the other downloadable models I found.